By Adrian Trzek, Oct 20, 2022

I had the chance to use Dataform (the open-source CLI version) two years ago, while building an analytics solution for a retailer. At that time, I ended up wrapping its core functionality in a REST API written in Go and used Cloud Scheduler to trigger the necessary transformations based on tags. Every part of that solution was deployed on GCP with Terraform. Google has since chosen almost the same approach, with Cloud Workflows acting as the wrapper around Cloud Scheduler.

In 2022, it seems Google released a preview of Dataform. Before that, Dataform was only available either as an open-source CLI or, if you were early to the party, as a web application with beautiful dependency graphs and the like.

Why Dataform?

Why would you consider using Dataform? The price of cloud storage has fallen considerably over the years, making it affordable to store raw, unprocessed data. This drove the shift from good old ETL (where transformations happen before the data is loaded) to ELT (where transformations are applied after the data is loaded). Data transformations play a crucial role for many data-driven organizations, and Dataform offers the following functionality for them:

- Everything, from table and view definitions to the SQL of the transformations, is stored in source control (SCM).

- Transformations can be validated with assertions.

- Incremental tables ensure that only the rows added since the last transformation are processed (this requires good partitioning of the source and destination tables to minimize costs).

- JavaScript snippets can be used to reuse chunks of code.

- Environments can be separated by using variables (although this does not seem to be available in the console during the preview).
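To make the incremental-table idea concrete, here is a toy JavaScript model of the selection logic: only source rows newer than the latest row already in the destination get processed. This is an illustration under assumptions, not Dataform's engine. The row shape and the `ts` field are hypothetical. In a real SQLX file, the same idea is expressed in SQL with Dataform's `incremental()` and `self()` helpers, comparing against the maximum timestamp in the destination table.

```javascript
// Toy model of incremental processing (illustration only, not Dataform's engine).
// Hypothetical rows carry a numeric `ts`, e.g. an ingestion timestamp in epoch millis.

/**
 * Returns the source rows an incremental run would pick up:
 * everything strictly newer than the latest timestamp in the destination.
 * An empty destination means a full (first) load.
 */
function rowsToProcess(sourceRows, destinationRows) {
  if (destinationRows.length === 0) {
    return [...sourceRows]; // first run: process everything
  }
  // Equivalent of SELECT MAX(ts) FROM destination
  const watermark = Math.max(...destinationRows.map((r) => r.ts));
  return sourceRows.filter((r) => r.ts > watermark);
}

// Usage
const source = [
  { id: 1, ts: 100 },
  { id: 2, ts: 200 },
  { id: 3, ts: 300 },
];
const destination = [
  { id: 1, ts: 100 },
  { id: 2, ts: 200 },
];

console.log(rowsToProcess(source, destination)); // [ { id: 3, ts: 300 } ]
console.log(rowsToProcess(source, []).length); // 3
```

This is also why partitioning matters: filtering on the timestamp lets BigQuery skip partitions that were already processed instead of scanning the whole source table.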

Folder structure

Since a Dataform project can quickly grow in size, my suggestion is to start with the right folder structure:

- The `definitions` folder is where you keep the `.sqlx` files, which should be split up into:
  - `reporting`, where the tables and views created by Dataform are defined, and
  - `sources`, where you reference the BigQuery datasets and tables to get the data from.
- The `includes` folder is where you store the JavaScript files with reusable SQL blocks. In my experience, this comes in handy when you end up making similar transformations for different purposes.

Google Cloud Set-Up

Once you have your Git repo with the right folder structure, the sources declared, and the tables or views you need defined, follow these steps:

- Enable `dataform.googleapis.com` for the project responsible for running the Dataform compilations. Enabling that API gives you a service account in the format `service-{PROJECT_NUMBER}@gcp-sa-dataform.iam.gserviceaccount.com`, which will perform the operations. Make sure you grant this service account the permissions it needs to create tables and to read the source tables in the corresponding datasets.

- To set up the Git integration, a personal access token is required, stored in Secret Manager. Make sure that the aforementioned service account has the necessary permissions to access the secret version.
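The setup steps above can be scripted. The sketch below only prints `gcloud` commands rather than running them. The project ID, project number, and secret name are hypothetical placeholders. The exact BigQuery roles are an assumption (a common set for reading data, creating tables, and running query jobs), so check them against your own security requirements.

```javascript
// Prints the gcloud commands for the Dataform setup steps.
// All concrete values in the usage example are hypothetical placeholders.

function dataformServiceAccount(projectNumber) {
  // Format: service-{PROJECT_NUMBER}@gcp-sa-dataform.iam.gserviceaccount.com
  return `service-${projectNumber}@gcp-sa-dataform.iam.gserviceaccount.com`;
}

// Assumed role set: read and write BigQuery data and run query jobs.
const BIGQUERY_ROLES = [
  "roles/bigquery.dataEditor",
  "roles/bigquery.dataViewer",
  "roles/bigquery.jobUser",
];

function setupCommands({ projectId, projectNumber, secretName }) {
  const member = `serviceAccount:${dataformServiceAccount(projectNumber)}`;
  return [
    `gcloud services enable dataform.googleapis.com --project=${projectId}`,
    ...BIGQUERY_ROLES.map(
      (role) =>
        `gcloud projects add-iam-policy-binding ${projectId} --member=${member} --role=${role}`
    ),
    // Lets the service account read the Git personal access token in Secret Manager.
    `gcloud secrets add-iam-policy-binding ${secretName} --project=${projectId} ` +
      `--member=${member} --role=roles/secretmanager.secretAccessor`,
  ];
}

// Usage with placeholder values
setupCommands({
  projectId: "my-project",
  projectNumber: "123456789012",
  secretName: "github-token",
}).forEach((cmd) => console.log(cmd));
```

Printing instead of executing keeps the sketch side-effect free, so you can review the bindings before running them.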

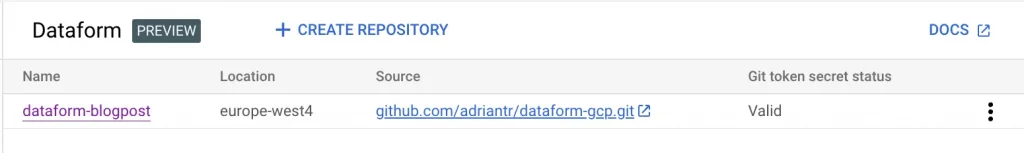

The Dataform dashboard should look like the screenshot above once it is connected to a Git repository.

When you click the name of the repository, you get the option to create a development workspace. Here is how Google's documentation defines a development workspace:

A development workspace is an editable copy of the files within a Git repository. Development workspaces let you follow a Git-based development process in Dataform. Edits that you make to files in a workspace start off as uncommitted changes that you can commit and then push.

In our case, because all the code is already in GitHub, we will simply pull the content of the main branch into our newly created workspace.

Dataform settings

Two files need to be in the repo for Dataform to work.

The first one is `dataform.json`, which needs at least a minimal configuration.

This tells Dataform that we run our transformations in BigQuery, in the project `kubectl-blogposts`, that the default dataset is `dataform`, and that we plan to create the resources in the `US` location.
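Based on the settings described above, a minimal `dataform.json` could be produced with the Node.js sketch below. The key names (`warehouse`, `defaultDatabase`, `defaultSchema`, `defaultLocation`) follow the project config of `@dataform/core` 2.x. This is a reconstruction under that assumption, so verify the keys against the version you install.

```javascript
// Writes a minimal dataform.json matching the settings described above.
const fs = require("fs");

const config = {
  warehouse: "bigquery", // transformations run in BigQuery
  defaultDatabase: "kubectl-blogposts", // GCP project ID
  defaultSchema: "dataform", // default BigQuery dataset
  defaultLocation: "US", // location of the datasets Dataform creates
};

// Two-space indentation plus a trailing newline, conventional for JSON config files.
fs.writeFileSync("dataform.json", JSON.stringify(config, null, 2) + "\n");
```

In practice you would just commit the resulting JSON file. The script only shows which four values the description maps to.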

The other file needed for Dataform to work correctly is `package.json`, generated by running `npm i @dataform/core`.

Data, data, data

For the purpose of this article, I opted for the publicly available IMDb dataset (you can find it in BigQuery Analytics Hub and add it to your project). In this tutorial, we create a user- and analysis-friendly view containing information about each movie: release year, directors, actors, average rating, and number of reviews.

We start with the source declarations:

- `definitions/sources/name_basics.sqlx` maps a person's ID to their current name
- `definitions/sources/title_basics.sqlx` contains general information about the title
- `definitions/sources/title_crew.sqlx` contains information about who directed the movie
- `definitions/sources/title_principals.sqlx` contains information about the actors
- `definitions/sources/title_ratings.sqlx` contains information about the ratings

A typical declaration SQLX file contains:

- `type`: `declaration`
- `schema`: the dataset in BigQuery
- `name`: the name of the source table

The directors

The directors of every movie are stored in a comma-separated field inside the `title_crew` table. This gives us the opportunity to create a view, `definitions/reporting/directors.sqlx`, that we can reference later in the final product. When writing the SQL, it is now possible to reference the `title_crew` table using the `ref` syntax: `${ref('title_crew')}`.

Under the hood, Dataform converts the reference to `project_id.dataset_name.table_name`. Our directors view will unnest the `directors` column of the `title_crew` table and give us a view that is easier to work with.

Putting it all together

Since this article is not about explaining the SQL, here is simply the code of `definitions/reporting/movies.sqlx`, the view that produces the end result:

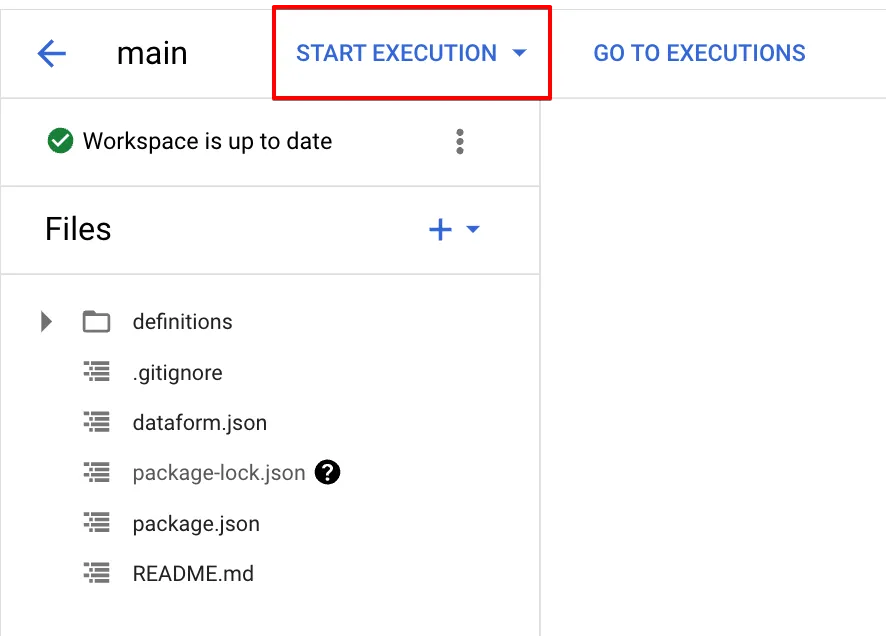

Once the code from the main branch has been pulled into the development workspace, feel free to execute all actions (which means that Dataform will create all resources, regardless of which tags they are assigned):

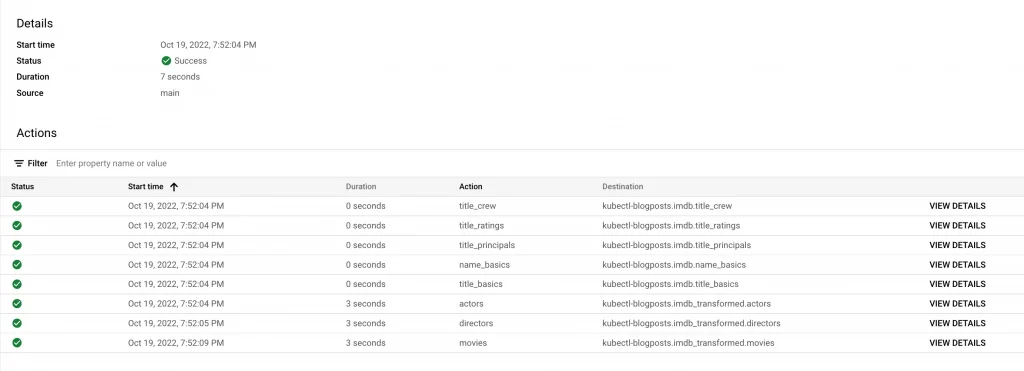

If you inspect the execution, you will notice that all `.sqlx` files have been processed to a destination. For each table, view, or declaration, it is possible to see the details and the generated SQL code.

Dataform was the tool that fueled my curiosity for data engineering, and I will admit that it is great to see it become part of Google Cloud. Although it is still a bit hidden, Dataform, among other things, enables proper release pipelines for your data engineers.

Feel free to send me a question if you have one! Link to the repo.

The original article was published on Medium.